Current Comparison of RISC and CISC Architectures

July 10, 2024

Hello, future reader. You are lucky to have found the first article I have published. The topic we are going to explore together, RISC versus CISC, may seem a bit complicated at first. But once we look at a little history and dig a little deeper, you will see the tangled ball of information in your mind quickly unravel and the question marks disappear.

Prepare any caffeinated drink, get into a comfortable but not too sleepy position and clear your mind. If you're ready, here we go.

● ● ●

The smiling man you see in the picture above is David Andrew Patterson, not Walter White. He is a highly successful computer scientist who coined the term RISC (Reduced Instruction Set Computing). He was a professor at the University of California, Berkeley from 1976 to 2016, and then joined Google.

He made significant contributions to RISC through the Berkeley RISC project, which he led as a professor. Patterson and his team designed processors around a small set of simple instructions instead of a large, complex instruction set. This allowed the processors to run faster and more efficiently. The Berkeley RISC designs later strongly influenced Sun's SPARC architecture, while John Hennessy's parallel project at Stanford produced the MIPS (Microprocessor without Interlocked Pipeline Stages) architecture. MIPS became one of the leading examples of RISC design and was popularized by many different manufacturers.

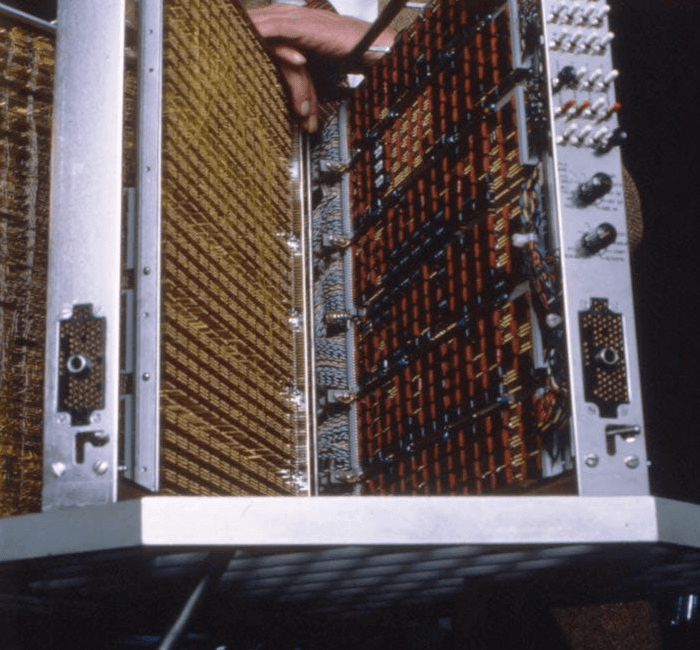

IBM 801

The IBM 801 was the first RISC system ever designed. Design work, led by John Cocke, began in 1975, and the machine was built in 1980. The design grew out of statistics the company collected from its customers, who were complaining that processors ran out of registers too quickly. From this came the idea that more registers would deliver better performance, and the design of the IBM 801 began.

You may already be familiar with this: telephone exchanges used to employ dozens of operators whose job was to connect telephone lines together. They would unplug a line from one socket and plug it into another, switching calls by hand.

The first, 24-bit version of the IBM 801 could switch 300 phone calls per second, which is more than 1 million calls per hour (300 × 3,600 = 1,080,000). The fact that a single processor can do the work of hundreds or even thousands of people by itself, almost by commanding electrons, is not only revolutionary for humanity but also extremely exciting and inspiring.

The next, 32-bit design of the IBM 801 followed in 1981, one year after the 24-bit design. This processor was used for compute-intensive work and in IBM's peripheral interfaces and channel controllers.

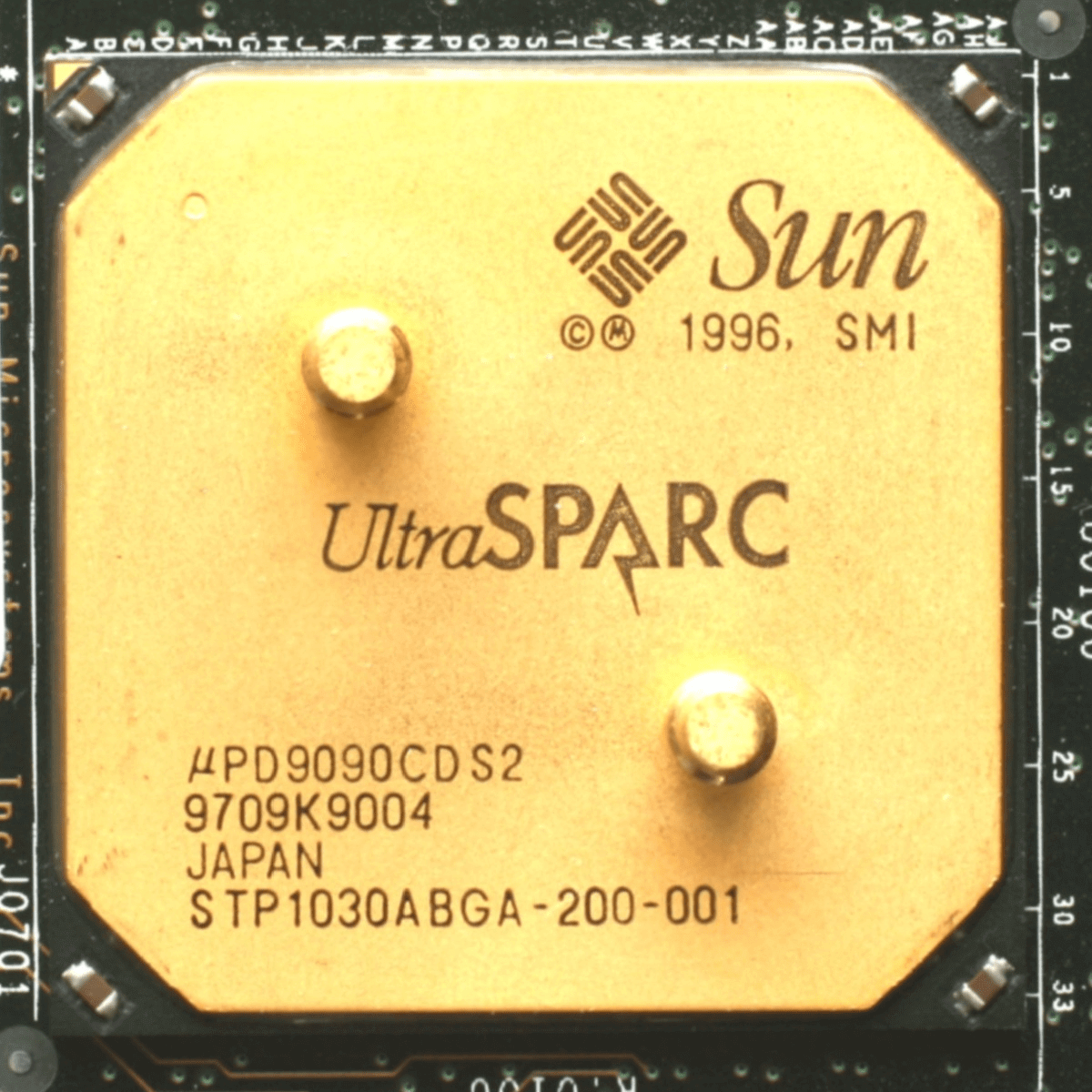

Sun UltraSPARC

Those of you interested in the Java programming language will know Sun Microsystems. The reason we rarely see its name or logo today is that Oracle acquired Sun Microsystems in 2010. Java, which Sun created, is still developed by Oracle today, but that is not what I want to talk about. What I want to talk about is the remarkable success of SPARC processors.

This success was so great that the Committee on Innovations in Computing and Communications of the U.S. National Research Council (Funding a Revolution: Government Support for Computing Research, 1999) credited SPARC with establishing the viability of the RISC concept. SPARC's success also renewed interest within IBM, which released new RISC systems by 1990; by 1995, RISC processors were the foundation of a $15 billion server industry.

SPARC processors owe their success to several factors. First, SPARC was an open architecture and an open standard, so different manufacturers could build compatible chips with flexible production options. It performed very well for its time and was often chosen for large server systems and databases. It supported many operating systems and offered high reliability. All of this gave software developers more flexibility and made it easier for applications to run smoothly on SPARC processors.

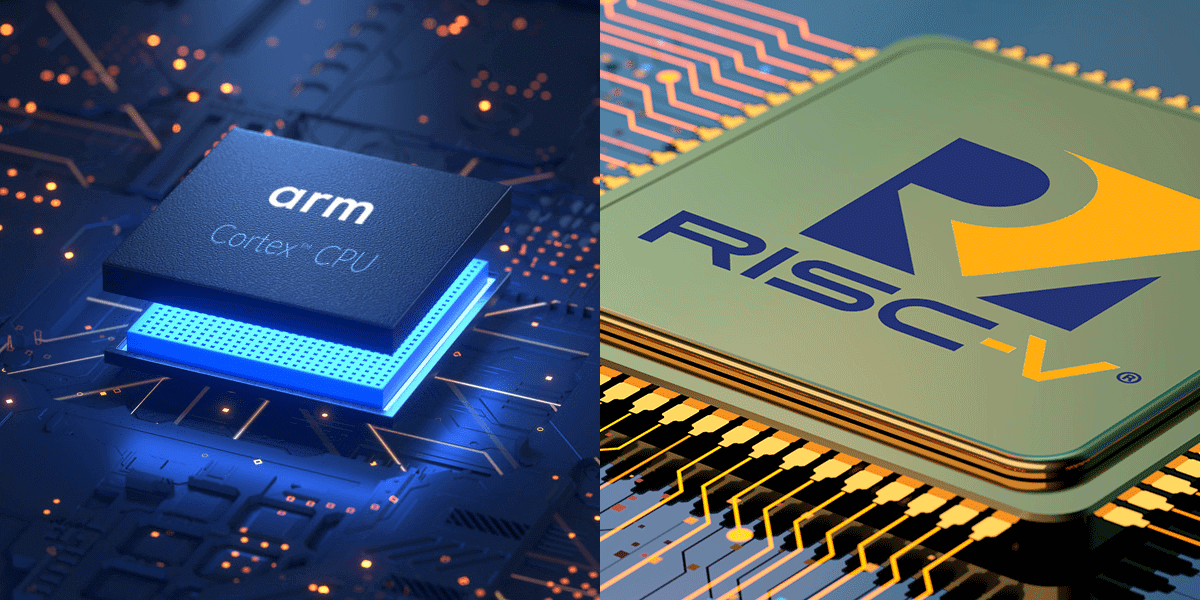

ARM and RISC-V

Let's take a look at today. The ARM (Advanced RISC Machines) architecture is used in mobile processors such as Qualcomm Snapdragon and in general-purpose computer processors such as the Apple M series. Large server processors such as IBM Power are also RISC designs, although they use IBM's own Power ISA rather than ARM.

There are many reasons for this. We will examine how RISC works in more detail in a moment, but in brief: RISC processors are very power-efficient, mainly because of the simplicity of their instruction set. That is why they are preferred in mobile devices and laptops, which need to run longer on battery, and in servers such as IBM Power, which need to run at lower cost.

I don't want to get into how RISC works without mentioning RISC-V, which was introduced in August 2014. Designed at the University of California, Berkeley, the birthplace of RISC, RISC-V is an open-standard instruction set architecture (ISA). Anyone can develop a processor based on RISC-V or contribute to its development. Thanks to its wide range of uses, modular design and low cost, RISC-V is widely used in research, education and industry. Because other processor instruction sets are often restricted by copyright or patents, technology enthusiasts affectionately call RISC-V "the Linux of processors."

● ● ●

RISC Architecture

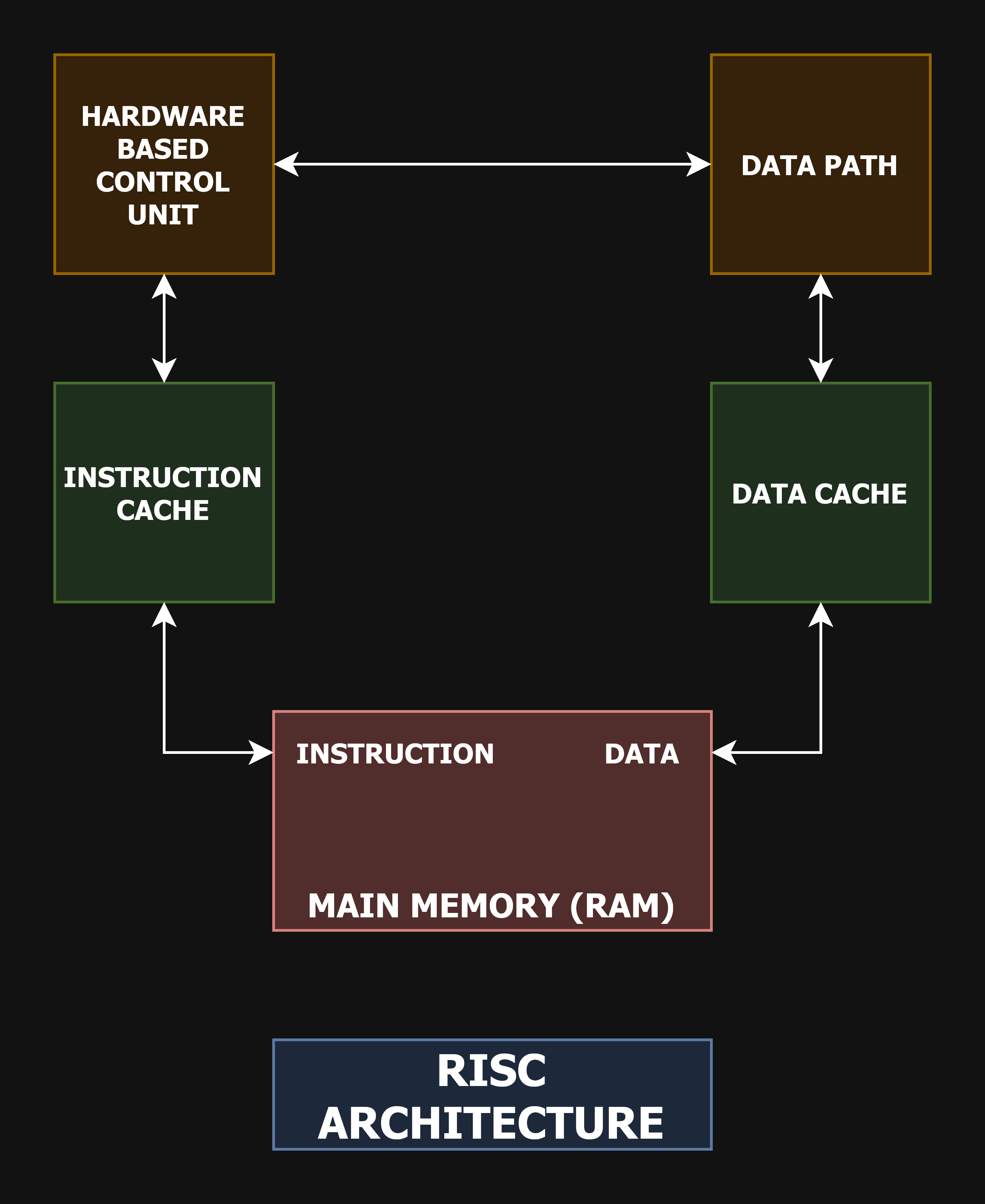

Now that we know the history of the RISC architecture, I think we can talk about its working principle. Before we continue reading, I suggest you take a look at the diagram above.

What was the first thing you noticed about the diagram? The first thing I noticed is that main memory is connected to two separate caches: an instruction cache and a data cache. This split is one of the key features RISC designs rely on to reach their performance targets.

The instruction cache gives the processor fast access to instructions: the processor can fetch instructions held in this cache without waiting for slower main memory, which lets the program run faster. The data cache does the same for data. In particular, it greatly speeds up access to frequently used data, which improves the processor's performance and efficiency.

When we discussed the history of RISC, you read that the architecture is preferred in mobile devices and servers because of its low power consumption. One contributor is the separation of the instruction cache and the data cache. The processor goes to the instruction cache when it needs an instruction and to the data cache when it needs data, so the two kinds of access do not compete for the same cache and can proceed in parallel, which reduces stalls and access time.
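One concrete benefit of keeping instructions and data apart can be shown with a toy simulation. This is a minimal sketch, assuming direct-mapped caches with one word per line and a deliberately chosen loop whose instruction address and data address collide in a unified cache of the same total size. The class, function and addresses are hypothetical. For other access patterns a unified cache can do just as well or better, so this illustrates one effect (conflicts between instruction fetches and data accesses), not a general result.

```python
"""Toy model: split instruction/data caches vs. one unified cache.

Assumptions (illustration only): direct-mapped caches, one word per
line, and a loop whose instruction and data addresses map to the same
line in the unified design.
"""


class DirectMappedCache:
    def __init__(self, num_lines: int) -> None:
        self.num_lines = num_lines
        self.tags = [None] * num_lines  # one tag per line; None means empty
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> None:
        index = address % self.num_lines   # which line the address maps to
        tag = address // self.num_lines    # identifies which address is cached
        if self.tags[index] == tag:
            self.hits += 1
        else:
            self.misses += 1
            self.tags[index] = tag         # evict whatever was there


def run(iterations: int = 100, instr_addr: int = 0, data_addr: int = 8):
    # Unified: 8 lines shared by instructions and data.
    unified = DirectMappedCache(8)
    # Split: the same 8 lines of total capacity, divided 4 + 4.
    icache = DirectMappedCache(4)
    dcache = DirectMappedCache(4)

    for _ in range(iterations):
        # Each loop iteration fetches one instruction and loads one datum.
        unified.access(instr_addr)
        unified.access(data_addr)
        icache.access(instr_addr)
        dcache.access(data_addr)

    return unified.misses, icache.misses + dcache.misses


if __name__ == "__main__":
    unified_misses, split_misses = run()
    print(f"unified cache misses: {unified_misses}")  # 200
    print(f"split caches misses:  {split_misses}")    # 2
```

In the unified cache, the instruction at address 0 and the datum at address 8 both map to line 0 and keep evicting each other, so every access misses. With split caches, each one misses only once on first use and then hits.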

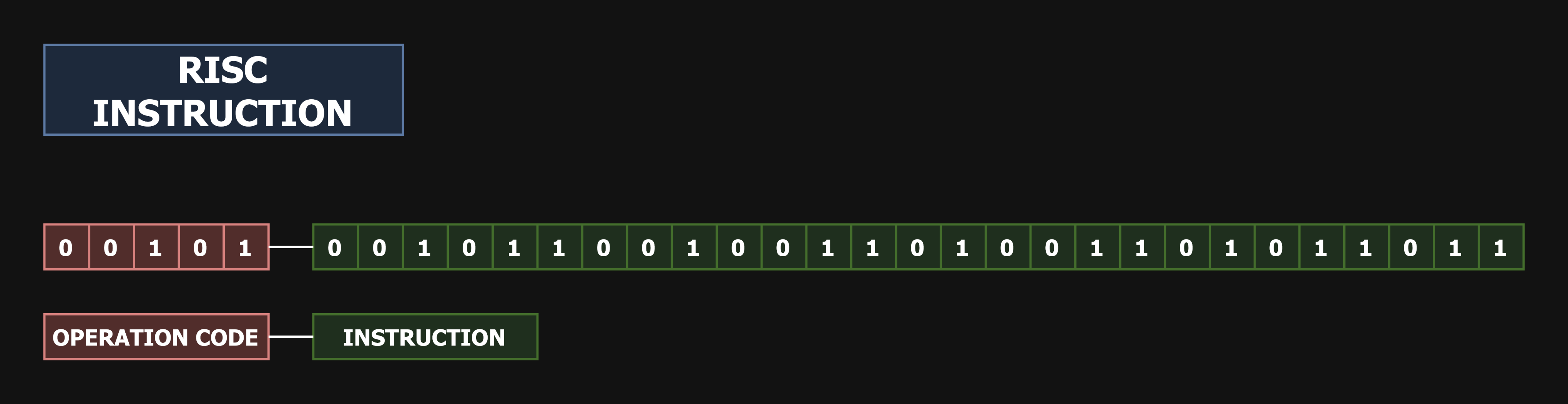

Another reason for the low power consumption and high performance of RISC is the design of its instruction set. All RISC instructions have the same length. Because the processor knows in advance where the opcode sits in each incoming instruction and how many bits each instruction occupies, it can decode instructions much faster and with simpler hardware, which improves both speed and power efficiency. In addition, RISC processors usually do not operate directly on main memory: they load data from main memory into registers, execute instructions on those registers and then store the results back to main memory. Keeping intermediate values in registers reduces the number and duration of memory accesses, which improves performance and saves power.

Use main memory only for LOAD and STORE instructions. Let other operations be handled between registers.

This restriction will simplify the design.

- David Andrew Patterson
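The load/store rule in the quote can be sketched as a tiny interpreter. This is a minimal illustration under stated assumptions: the instruction names, the tuple format and the eight-register file are invented for this example and do not come from any real instruction set. Only `LOAD` and `STORE` touch memory; arithmetic works purely on registers, and a value already in a register can be reused without another memory access.

```python
"""A toy load/store machine: only LOAD and STORE touch memory.

Instruction names and the tuple format are hypothetical, chosen only
to illustrate the load/store principle.
"""


def run(program, memory):
    regs = [0] * 8            # a small register file: r0..r7
    memory_accesses = 0
    for op, *args in program:
        if op == "LOAD":      # LOAD rd, addr : rd <- memory[addr]
            rd, addr = args
            regs[rd] = memory[addr]
            memory_accesses += 1
        elif op == "STORE":   # STORE rs, addr : memory[addr] <- rs
            rs, addr = args
            memory[addr] = regs[rs]
            memory_accesses += 1
        elif op == "ADD":     # ADD rd, rs1, rs2 : registers only
            rd, rs1, rs2 = args
            regs[rd] = regs[rs1] + regs[rs2]
        elif op == "MUL":     # MUL rd, rs1, rs2 : registers only
            rd, rs1, rs2 = args
            regs[rd] = regs[rs1] * regs[rs2]
        else:
            raise ValueError(f"unknown instruction {op!r}")
    return memory_accesses


# Compute y = (a + b) * a with a at address 0, b at 1 and y at 2.
memory = {0: 3, 1: 4, 2: 0}
program = [
    ("LOAD", 1, 0),      # r1 <- a
    ("LOAD", 2, 1),      # r2 <- b
    ("ADD", 3, 1, 2),    # r3 <- a + b   (no memory access)
    ("MUL", 3, 3, 1),    # r3 <- r3 * a  (a is reused from r1, not reloaded)
    ("STORE", 3, 2),     # y <- r3
]
accesses = run(program, memory)
print(memory[2], accesses)  # 21 3
```

Five instructions run, but only three of them touch memory; the arithmetic happens entirely between registers, which is exactly the restriction the quote describes.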

32-bit RISC Instruction

The diagram above shows a typical 32-bit RISC instruction. The exact layout varies from processor to processor, but the sharpest difference from CISC is that the opcode occupies a short field at a fixed position and every instruction has the same length. The main design goal of RISC is to keep each instruction simple and specific so that it can complete in a small number of processor cycles (usually one). This saves power and simplifies processor design.

The simplicity of the instruction set and addressing modes allows most instructions to run in a single machine cycle, and the simplicity of each instruction guarantees a short cycle time. Also, the design time of such a machine will be much shorter.

- David Andrew Patterson
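To make "fixed-position fields" concrete, here is a minimal decoder sketch. It uses the RISC-V RV32I R-type format as the example, which is an assumption on my part: the diagram in this article may use a different layout. Because every field always sits at the same bit position, decoding is just shifting and masking.

```python
"""Decoding the fixed-position fields of a 32-bit RISC instruction.

Uses the RISC-V RV32I R-type layout as a concrete example; other RISC
instruction sets place their fields differently.
"""


def decode_r_type(word: int) -> dict:
    # Every field is at a fixed bit position, so no field depends on
    # decoding another one first.
    return {
        "opcode": word & 0x7F,           # bits 0-6
        "rd":     (word >> 7) & 0x1F,    # bits 7-11
        "funct3": (word >> 12) & 0x7,    # bits 12-14
        "rs1":    (word >> 15) & 0x1F,   # bits 15-19
        "rs2":    (word >> 20) & 0x1F,   # bits 20-24
        "funct7": (word >> 25) & 0x7F,   # bits 25-31
    }


# 0x002081B3 encodes "add x3, x1, x2" in RV32I.
fields = decode_r_type(0x002081B3)
print(fields)
# {'opcode': 51, 'rd': 3, 'funct3': 0, 'rs1': 1, 'rs2': 2, 'funct7': 0}
```

Opcode 51 (0x33) marks a register-register arithmetic instruction, and `funct3 = 0` with `funct7 = 0` selects `add`. The hardware equivalent is simply wiring each bit range to the right place, which is part of why RISC decoders can be small.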

RISC Architecture Instruction Conversion

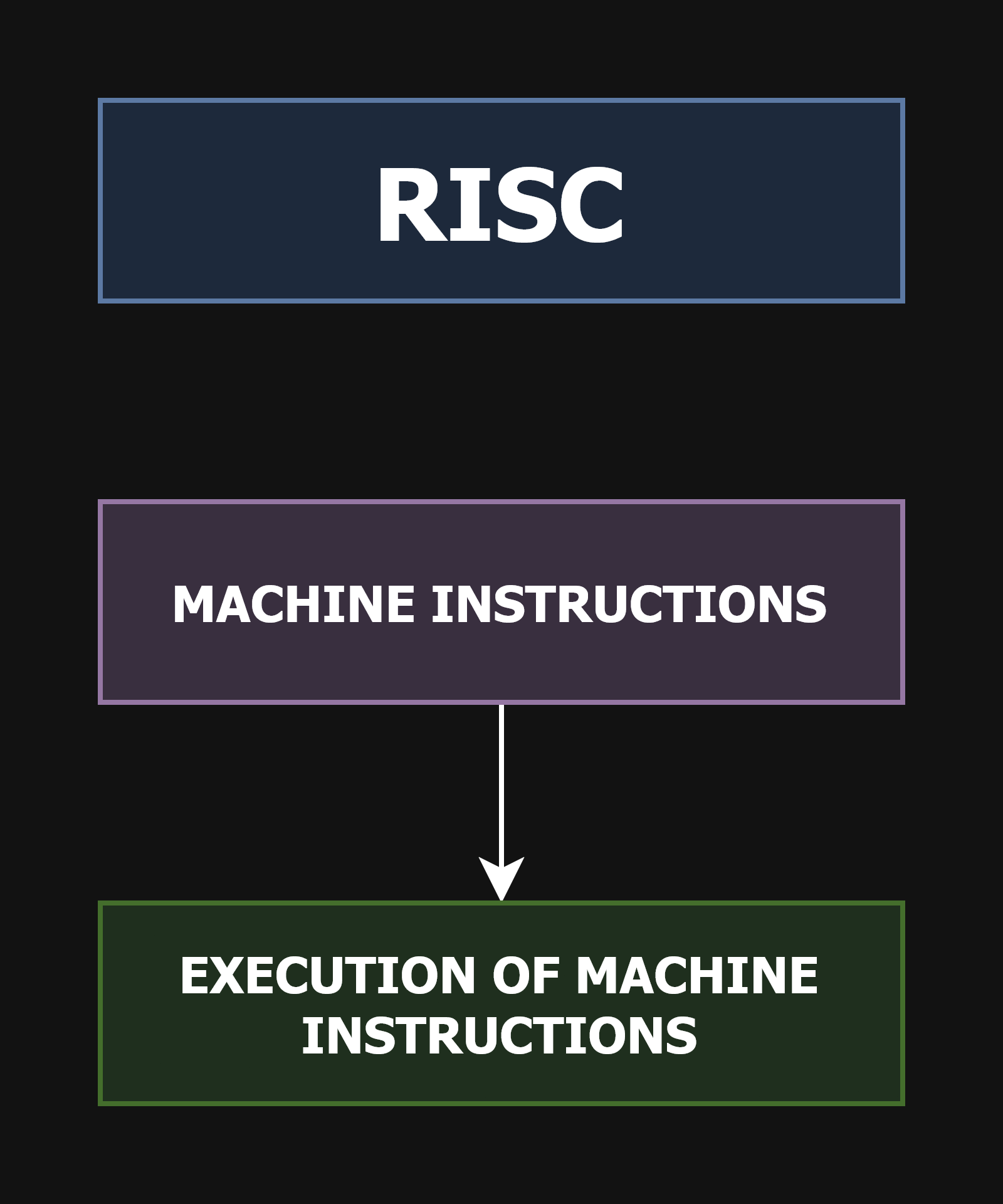

Classic RISC processors do not convert instructions into simpler, customized micro-operations; the processor executes the instructions directly. This can make the processor faster, but more instructions may be required. Short, fixed-length instructions that each do one specific task can run faster, but a program needs more of them to do the same work, and these longer programs increase the system's storage and memory requirements.

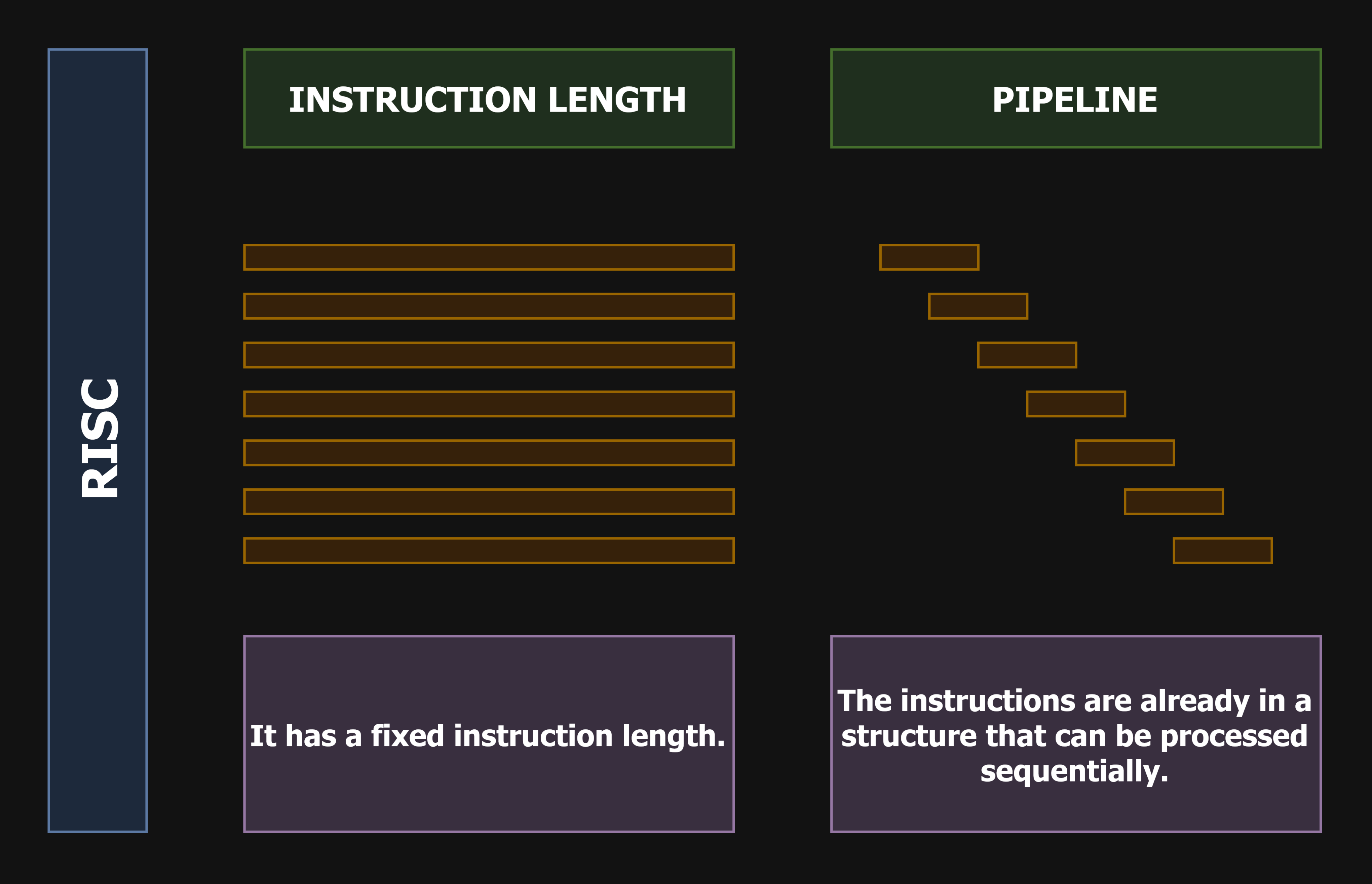

RISC Architecture Instruction Structure and Pipeline Feature

In addition, pipelining is comparatively easy to implement in RISC. Pipelining is a technique in which the processor splits the execution of each instruction into stages (in the classic RISC pipeline: fetch, decode, execute, memory access and write-back), and each stage works on a different instruction at the same time. As consecutive instructions flow through these stages, several instructions are in progress at once; once the pipeline is full, one instruction can complete every cycle.

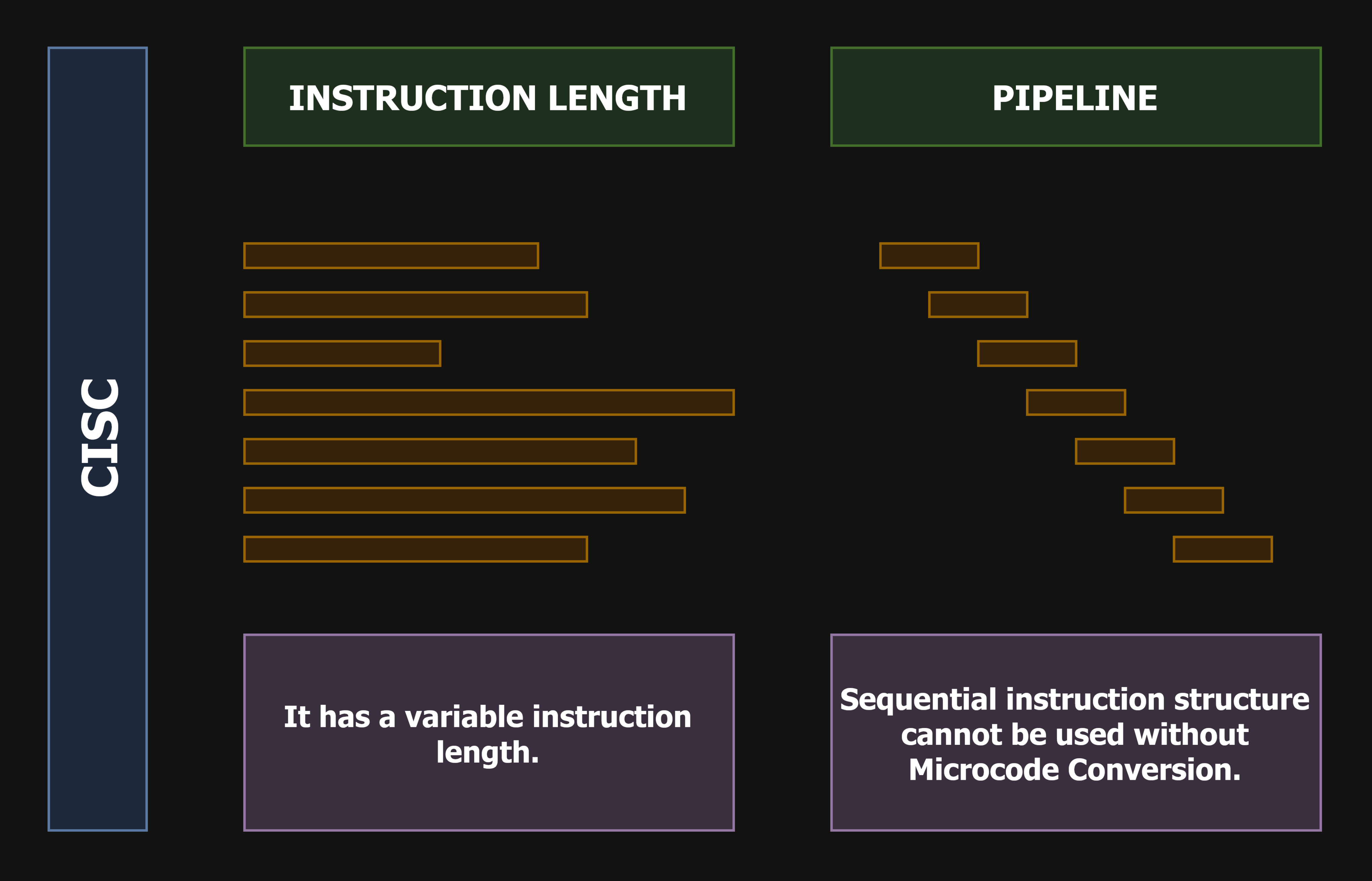

In RISC, because all instructions have the same length and structure, pipelining is much more practical. The processor knows exactly which bits hold the opcode and the operands, so it can sequence the stages accordingly. CISC processors also support pipelining, but their instructions must first be converted into micro-operations. This creates extra overhead and energy cost for a CISC processor, although the approach has its own advantages.
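The benefit of pipelining can be estimated with simple arithmetic: without a pipeline, n instructions through k stages take n × k cycles; with an ideal pipeline they take k + (n − 1) cycles. The sketch below assumes the classic five RISC stages, one cycle per stage and no hazards or stalls, which real pipelines never fully achieve. The function names are illustrative.

```python
"""Cycle counts for an ideal k-stage pipeline vs. no pipelining.

Assumes the classic five RISC stages, one cycle per stage, and no
hazards or stalls.
"""

STAGES = ["IF", "ID", "EX", "MEM", "WB"]


def cycles_unpipelined(n: int, k: int = len(STAGES)) -> int:
    # Each instruction runs through all k stages before the next starts.
    return n * k


def cycles_pipelined(n: int, k: int = len(STAGES)) -> int:
    # Fill the pipeline once (k cycles), then one instruction finishes per cycle.
    return k + n - 1 if n > 0 else 0


def timeline(n: int) -> None:
    # Row i shows which stage instruction i occupies in each cycle.
    total = cycles_pipelined(n)
    for i in range(n):
        row = ["  .  "] * total
        for s, name in enumerate(STAGES):
            row[i + s] = f"{name:^5}"
        print(f"I{i + 1}: " + "".join(row))


if __name__ == "__main__":
    timeline(4)
    print(cycles_unpipelined(100), cycles_pipelined(100))  # 500 104
```

For 100 instructions the ideal speedup is 500 / 104, close to the number of stages. The diagonal pattern printed by `timeline` only works so neatly because every instruction has the same shape.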

Speaking of CISC, I think we have covered RISC in enough detail. Now it is time to look at the CISC architecture, the design approach behind the legendary x86 architecture.

● ● ●

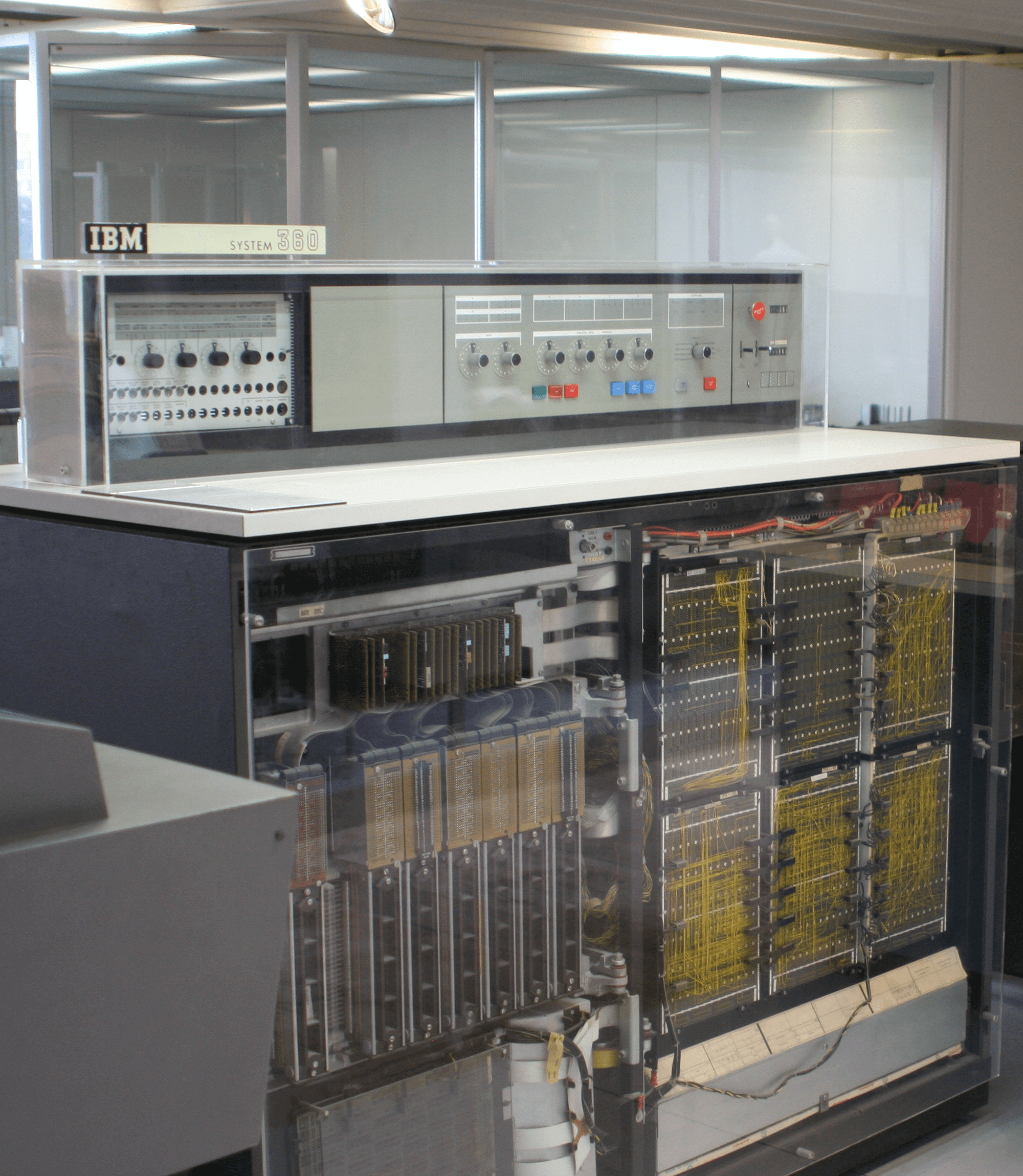

IBM S/360

IBM (International Business Machines) introduced the IBM S/360 computer system, often cited as the first CISC (Complex Instruction Set Computing) architecture, in 1964 and continued to sell it until 1978. During those fourteen years it dominated the computer market and set several standards for the computer industry.

The IBM S/360 was designed for high performance, compatibility and reliability, and it was sold with a software package that worked with various operating systems and applications. Thanks to this package, IBM met the data processing needs of businesses better and strengthened its position among the brands preferred by businesses around the world.

The IBM S/360 was a performance monster between 1964 and 1978, even though its specifications would be considered primitive today. On the processor side it had 16 general-purpose registers, each 32 bits wide. It could reach a clock speed of up to 50MHz. It had a rich instruction set covering memory management, arithmetic, data processing and control functions. Thanks to this rich instruction set and its large market share, it attracted programmers, and the variety and quality of applications grew day by day.

On the main memory side, the first IBM S/360 systems had between 8KB and 16KB, but as interest in the system grew, models with several megabytes of main memory were produced (the architecture's 24-bit addresses allowed at most 16MB). Main memory operated at frequencies between 300kHz and 1MHz, which is very slow by today's standards but was quite sufficient at the time.

All these hardware and software features, superior for their time, made the IBM S/360 spread rapidly through the business world, and it grew more popular by the day. IBM owes much of its current status to the unquestionable success of the S/360.

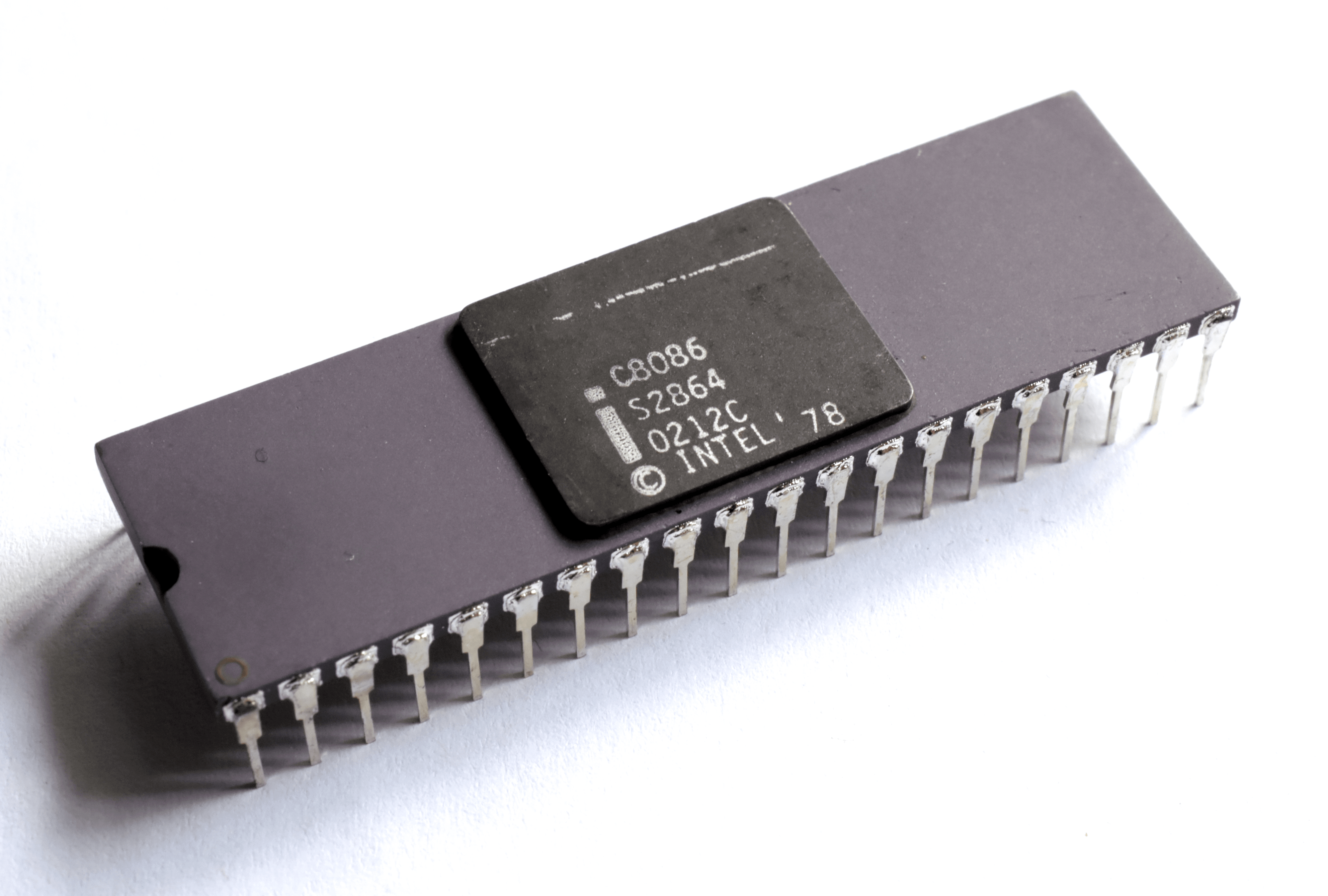

Intel C8086

The C8086 is a 16-bit processor made by Intel that gave rise to the x86 architecture. Design work began in the spring of 1976, and the processor was released in the summer of 1978. Thus one of the most successful processor architectures in the world was born.

The project was led by Stephen Paul Morse, who was more of a software expert than a hardware expert. By emphasizing a software-centered rather than a hardware-centered approach to processor design, he helped the 8086 architecture attract a lot of attention, and Intel soon gained market dominance over competitors such as Zilog and Motorola.

As I mentioned, the C8086 is a 16-bit processor: it has 16-bit registers and a 16-bit data bus. A 16-bit register can address only 64KB, and the 8086 overcame this limit with segment registers. There are four of them, CS (Code Segment), DS (Data Segment), SS (Stack Segment) and ES (Extra Segment), and the processor uses the appropriate one for each kind of access. The physical address is formed by shifting the segment value left by four bits and adding a 16-bit offset, which yields a 20-bit address. Thus systems powered by the C8086 family could use up to 1MB of memory instead of 64KB.
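The segment arithmetic described above fits in a few lines. This sketch models 8086 real-mode translation (physical = segment × 16 + offset) and assumes the 8086's 20-bit address bus, so addresses past 1MB wrap around to zero. The function name is illustrative.

```python
"""8086 real-mode address translation: physical = segment * 16 + offset.

The 8086 has a 20-bit address bus, so results wrap around at 1MB.
"""


def physical_address(segment: int, offset: int) -> int:
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset must be 16-bit values")
    # Shift the segment left by 4 bits, add the offset, keep 20 bits.
    return ((segment << 4) + offset) & 0xFFFFF


print(hex(physical_address(0x1234, 0x0010)))  # 0x12350
print(hex(physical_address(0xFFFF, 0x0010)))  # 0x0 (wraps past 1MB)
# Different segment:offset pairs can name the same byte:
print(physical_address(0x1000, 0x0020) == physical_address(0x1002, 0x0000))  # True
```

Two 16-bit values thus reach a 1MB space, at the cost of many segment:offset pairs aliasing the same physical byte.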

Today's x86 processors, from Intel and AMD alike, still start up in an 8086-compatible real mode and support "Virtual 8086 mode", which lets old 16-bit applications run under a 32-bit operating system (although not while the processor is in 64-bit long mode). In other words, the 8086 processor family still exists, if not physically, then in spirit.

AMD and Intel

Today, two companies lead the production of CISC processors for personal computers: Intel (Integrated Electronics) and AMD (Advanced Micro Devices). The two are in fierce competition in the market.

As much as I like Intel for its contribution to technology and the high performance of its processors, I have always liked AMD's user policy more.

Intel keeps changing the socket type it uses on motherboards (LGA1150, LGA1151, LGA1155, LGA1156, LGA1200, LGA1700 and so on), so users have to buy a new motherboard every time they want to upgrade their processor. Given this pattern, it does not seem impossible that Intel has made a financial arrangement with motherboard makers.

AMD, on the other hand, has two sockets in popular use, AM4 and AM5, and new processors are almost always released with support for older motherboards, so users don't have to change their motherboard for every new processor. This is both more economical and more environmentally friendly. AMD also created AMD64 (x86-64), the 64-bit extension of the x86 architecture that Intel later adopted as well, which makes us love AMD even more.

In terms of performance, Intel and AMD take turns at the top, but only on paper. My personal opinion, shared by many other tech-savvy people with an objective point of view, is that Intel processors perform better in practice. The main reason is software design rather than hardware superiority: most software developers optimize their projects for Intel processors. Whether this is driven by system compatibility or financial gain is debatable, but it does not change the fact that Intel processors generally perform better. It is also worth noting that Intel chipsets run cooler.

● ● ●

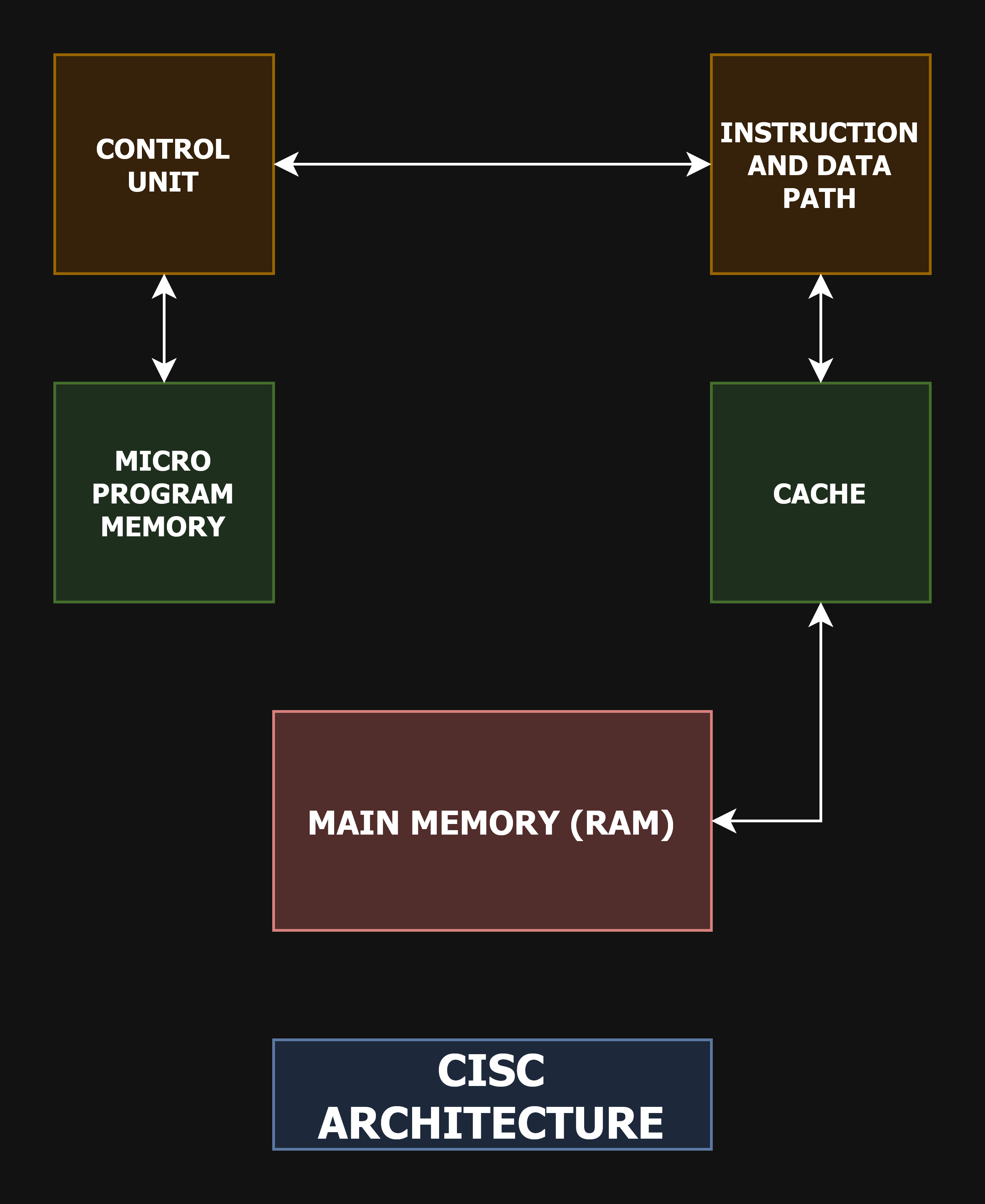

CISC Architecture

Now that we have covered the history of CISC, we can talk about how it works. Please study the diagram above, even briefly, and try to spot how it differs from the RISC architecture.

I would like to draw your attention to one module in this diagram: the Micro Program Memory. This memory holds the microcode used to break instructions down into micro-operations (μops), and it is a key reason a CISC processor can perform as well as a RISC processor.

Instructions and data fetched from RAM are first loaded into the cache. From there, the instruction and data buses deliver them to the Control Unit, where the instructions are broken down into μops (micro-operations) and processed.

Converting instructions into micro-operations does cost time, but that cost is small compared with the performance gained afterwards. After the conversion, a CISC processor can use pipelining, because the micro-operations have a uniform format whose structure is known to the processor. Operations can therefore be sequenced and executed in parallel.
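A minimal sketch of the idea: one CISC-style instruction with a memory operand is "cracked" into a short sequence of simple, RISC-like micro-operations. The instruction syntax and micro-op names here are hypothetical; real x86 decoders are far more complex, and their internal micro-ops are not publicly specified in this form.

```python
"""Cracking a CISC-style instruction into RISC-like micro-operations.

The syntax and micro-op names are hypothetical, for illustration only.
"""

from dataclasses import dataclass


@dataclass(frozen=True)
class MicroOp:
    op: str
    dst: str
    srcs: tuple


def is_memory(operand: str) -> bool:
    return operand.startswith("[") and operand.endswith("]")


def crack(instruction: str) -> list[MicroOp]:
    mnemonic, operands = instruction.split(maxsplit=1)
    dst, src = (part.strip() for part in operands.split(","))
    if is_memory(dst):
        # Read-modify-write: load, operate on a temporary, store back.
        return [
            MicroOp("load", "tmp", (dst,)),
            MicroOp(mnemonic, "tmp", ("tmp", src)),
            MicroOp("store", dst, ("tmp",)),
        ]
    if is_memory(src):
        # Memory source: load into a temporary, then operate on registers.
        return [
            MicroOp("load", "tmp", (src,)),
            MicroOp(mnemonic, dst, (dst, "tmp")),
        ]
    # Register-to-register form maps to a single micro-op.
    return [MicroOp(mnemonic, dst, (dst, src))]


for uop in crack("add [rbx], eax"):
    print(uop)
```

After cracking, each micro-op does one simple thing (a load, an add, or a store), which is the kind of uniform work a pipeline handles well.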

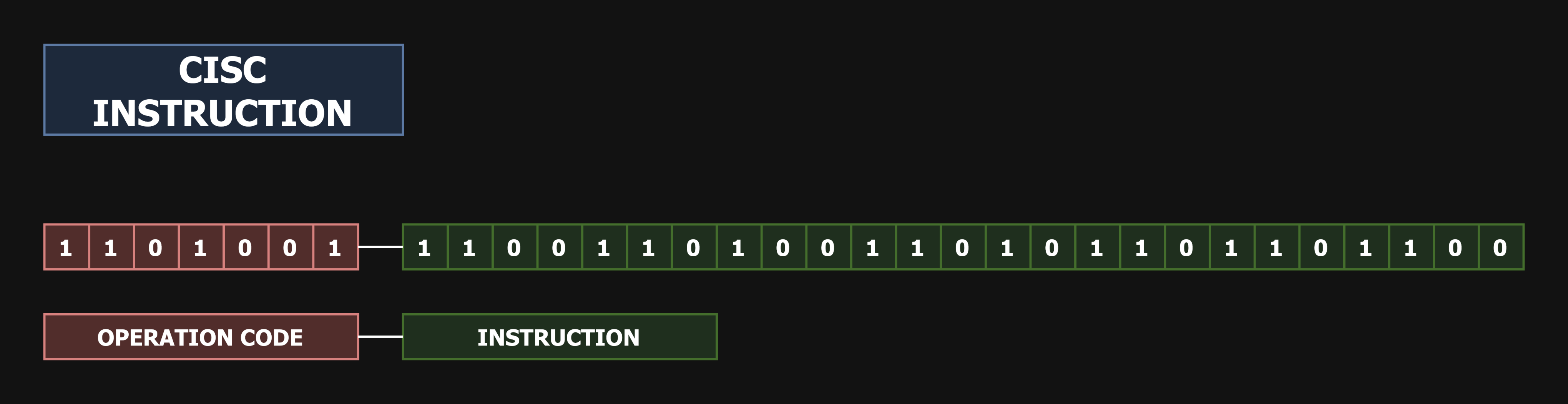

32-bit CISC Instruction

The diagram above shows a typical 32-bit CISC instruction. The main differences from the RISC instruction are that the opcode can be longer and the instruction length varies. A longer, more complex instruction is harder to decode and can hurt performance, but because each instruction does more work, the program as a whole becomes shorter, which reduces memory and storage requirements.

To clarify further: in CISC, a separate instruction is not needed for every small step as it is in RISC, because a single instruction can combine several operations, such as loading a value from memory, adding to it and storing the result. CISC instructions may therefore be long, but the program is much shorter. The instructions are less regular than in RISC, but thanks to the shorter program and the conversion into μops (micro-operations), CISC can still achieve high performance. Its power efficiency, however, does not match that of RISC.

CISC Architecture Instruction Conversion

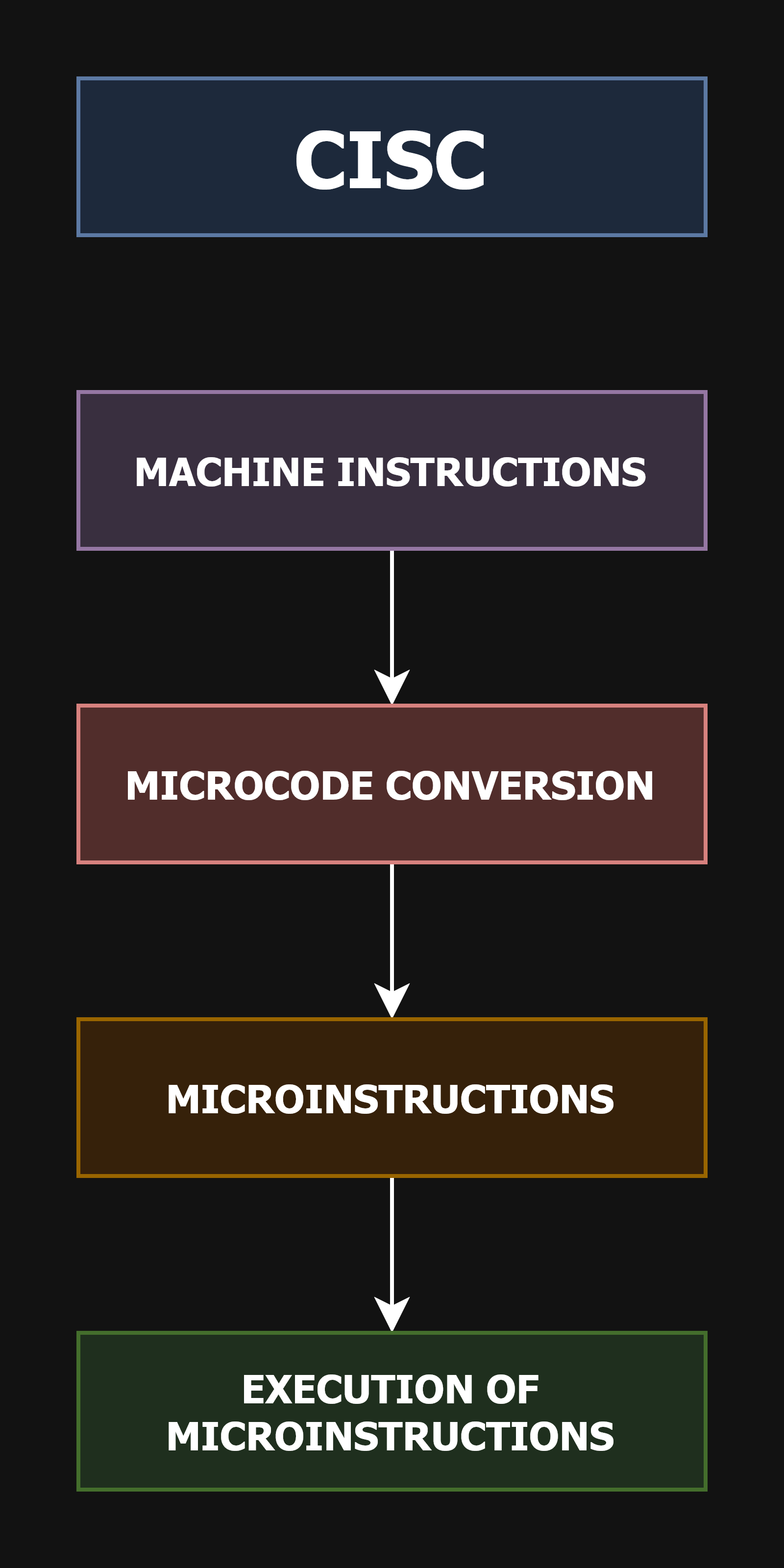

Unlike in RISC, machine instructions in CISC are not executed directly. Because instructions vary in length and structure, they first go through Microcode Conversion, which gives them a regular structure similar to that of RISC instructions.

After this conversion, the resulting code is broken down into micro-instructions according to the processor's design, and these micro-instructions are what actually executes. Each micro-instruction can usually be completed in one processor cycle, which allows fast, parallel processing.

CISC Architecture Instruction Structure and Pipeline Feature

Unlike RISC processors, CISC processors use instructions of different lengths. Variable sizes make the program shorter, but they make it harder for the processor to fetch and separate instructions. They also make it very difficult to pipeline the instructions as they are: the positions of the opcode and operands vary from one instruction to the next, and the processor cannot know where the next instruction starts until it has worked out the length of the current one.
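The boundary problem can be shown with a small sketch. For fixed 4-byte instructions, every start address is known in advance. For the variable-length case I use an invented length rule (the low two bits of an instruction's first byte give its length, 1 to 4 bytes), which is purely hypothetical; real x86 length decoding depends on prefixes, opcode and addressing bytes and is much more involved. The point is the sequential dependency: each start address depends on the previous instruction.

```python
"""Why variable-length instructions complicate fetch and decode.

The variable-length rule (low two bits of the first byte give the
length, 1-4 bytes) is hypothetical, chosen only for illustration.
"""


def fixed_boundaries(code: bytes, width: int = 4) -> list[int]:
    # Start addresses are known up front (0, 4, 8, ...), so several
    # instructions can be located in parallel.
    return list(range(0, len(code), width))


def variable_boundaries(code: bytes) -> list[int]:
    # Instruction i+1's start depends on instruction i's length,
    # so boundaries must be found one after another.
    starts, pc = [], 0
    while pc < len(code):
        starts.append(pc)
        length = (code[pc] & 0b11) + 1   # hypothetical length field
        pc += length
    return starts


code = bytes([0x03, 0xAA, 0xBB, 0xCC, 0x00, 0x01,
              0xDD, 0x02, 0xEE, 0xFF, 0x01, 0x99])
print(fixed_boundaries(code))     # [0, 4, 8]
print(variable_boundaries(code))  # [0, 4, 5, 7, 10]
```

The same 12 bytes read under each rule: the fixed-length starts are a simple formula, while the variable-length starts can only be discovered by walking the stream one instruction at a time.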

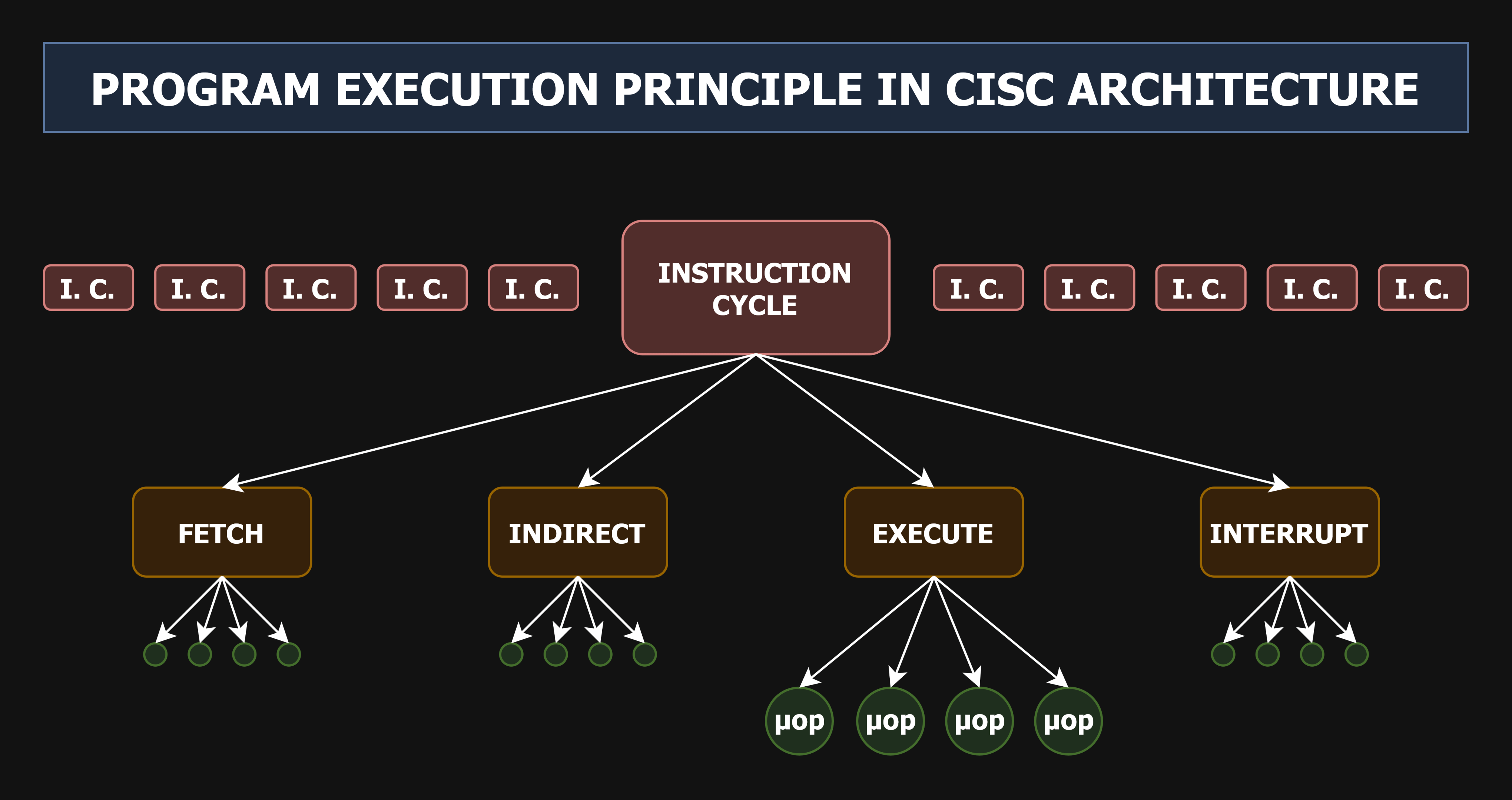

Program Execution Principle in CISC Architecture

To overcome this inconsistency in instruction size and structure, the micro-operation (μop) approach was adopted. As the diagram above shows, the instruction cycle is divided into parts: fetch, indirect, execute and interrupt. Each part is then broken down into even smaller micro-operations, each of which can usually be completed in a single processor clock cycle.

This results in a structure similar to the instruction structure in the RISC architecture and makes the pipeline feature available. At the same time, the cost of time spent to achieve this structure is offset by the performance achieved.
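As an example of this breakdown, the fetch part of the instruction cycle is commonly described as three register-transfer micro-operations: t1: MAR ← PC; t2: MBR ← memory, PC ← PC + 1; t3: IR ← MBR. The sketch below models those steps. Word-addressed memory, an increment of 1 and the memory contents are simplifying assumptions for illustration.

```python
"""Register-transfer sketch of the fetch cycle as micro-operations.

Assumes word-addressed memory and a PC increment of 1; the memory
contents are arbitrary example values.
"""

from dataclasses import dataclass, field


@dataclass
class CPU:
    memory: list
    pc: int = 0    # program counter
    mar: int = 0   # memory address register
    mbr: int = 0   # memory buffer register
    ir: int = 0    # instruction register
    log: list = field(default_factory=list)

    def fetch(self) -> None:
        # t1: the next instruction's address goes to the MAR.
        self.mar = self.pc
        self.log.append("t1: MAR <- PC")
        # t2: read memory into the MBR; the PC advances in the same step.
        self.mbr = self.memory[self.mar]
        self.pc += 1
        self.log.append("t2: MBR <- M[MAR]; PC <- PC + 1")
        # t3: move the instruction into the IR for decoding.
        self.ir = self.mbr
        self.log.append("t3: IR <- MBR")


cpu = CPU(memory=[0x1940, 0x5941, 0x2941])
cpu.fetch()
print(hex(cpu.ir), cpu.pc)   # 0x1940 1
print("\n".join(cpu.log))
```

Each step is a simple transfer between registers or between a register and memory, small enough to complete in one clock cycle.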

● ● ●

Even a million transistors can be insufficient when an entire computer has to be built from them. This raises the question of whether the extra hardware required to implement the CISC architecture is the best use of these scarce resources.

- David Andrew Patterson

David Andrew Patterson made the above remarks as a result of questioning the increasing cost of CISC processors and the time-consuming complexity of their design.

CISC processors supported a large number of complex instructions and used many transistors to execute them. As a result, they usually contained more transistors and, because of their complexity, incurred higher hardware costs. Between 1970 and 1980, processor designs had already become quite complex and costly. For these reasons, the RISC approach was introduced to produce processors with simpler designs at lower cost.

Today, however, this is no longer quite the case. The terms RISC and CISC are no longer a precise classification; instead, they describe a mix of techniques used in processor design. Modern processors combine RISC and CISC ideas to reduce complexity and increase performance, so exactly which approach a given processor takes depends on its model and generation.

In addition, CISC looks more complex to programmers from the outside, but it offers a richer instruction set and broader processor capabilities. A CISC processor can perform several operations with a single instruction, which makes it easier for programmers to write code that handles complex operations.

RISC, on the other hand, has a simple and straightforward instruction set, so programs must combine several instructions to perform complex operations. This increases memory requirements, but the power savings and high performance that come from the simple hardware of RISC processors let these programs run very efficiently.

Both architectures support pipelining, but CISC processors must first break instructions down into micro-operations for fast, parallel processing. RISC processors can execute machine instructions directly, because the format and length of their instructions are standardized and therefore known to the processor.

As you can see, both processor architectures have advantages and disadvantages. CISC processors are mainly used in general purpose computers, while RISC processors are used in areas where energy efficiency is important, such as servers and portable devices.

All modern Intel and AMD processors have RISC-like cores behind decoders that translate CISC-style x86 instructions. It is wrong to ask "Which is more popular?" or "Which is more successful?" about either processor. The correct question is "Which is better suited for X?", where "X" can be a use case or a specific purpose.

- Quora User

● ● ●

Finally, I have compiled information from a forum discussion that profoundly influenced my opinion about processors with RISC and CISC architectures. I would like to share this information with you below:

Today, above all, the execution speed of individual instructions has only a small impact on overall execution speed. Performance now depends heavily on cache capacity and caching effectiveness. Processor clock speeds have risen almost to the point where power consumption and temperature limit them, which keeps the clock speeds of RISC and CISC processors at roughly the same level.

Today, RISC and CISC processors spend about the same average time per instruction. As transistor budgets have grown, CISC processors, like RISC processors, have come to handle most instructions internally in a fixed-size format.

Today, CISC processors have also become much better at translating instructions into micro-operations. Although x86 instructions are not simple to decode, the number of transistors spent on decoding no longer matters much. Typical decoders in today's processors can convert three instructions per processor cycle, and they account for less than one percent of a chip's transistors. In this respect, RISC and CISC processors today are at almost the same technical level.

Today, however, RISC has a major problem. Because its instructions are kept simple and fixed-length to keep the design simple, we can typically expect x86 code to be about half the size of the equivalent RISC code. This means a RISC processor uses about twice as much main-memory bandwidth to fetch instructions as an x86 processor. It also means a RISC processor needs an instruction cache about twice as large as an x86 processor's to achieve the same performance.

● ● ●

Reading the discussion above completely changed my perspective on RISC and CISC. Whenever things are compared in any field of science, it is important to remember that each usually has its own advantages and disadvantages. In this case, we can safely say that RISC and CISC are two very important processor design approaches for humanity.
